AI and dinosaurs

Have you ever noticed how almost every kid goes through that intense dinosaur phase? You know the one. Suddenly, your five-year-old nephew can rattle off facts about the size, speed, and power of a Tyrannosaurus rex or Velociraptor as if he just graduated with a degree in paleontology. It’s impressive at first – until you realize he’s really just memorizing dinosaur names and random stats from a book or a TV show.

But in his mind? He’s unstoppable. Armed with this newfound knowledge, he doesn’t just know about dinosaurs; he becomes one, in a way. He projects their strength and power onto himself. It’s like he’s transformed into a mini-dinosaur expert, ready to take on the world. In reality, though, he’s still just scratching the surface – because memorizing cool dinosaur facts is a far cry from the in-depth understanding of a real paleontologist.

Funny enough, the same thing is happening right now with AI.

In recent months, it feels like we’ve been overrun by a wave of self-proclaimed AI experts. Everyone’s suddenly talking about machine learning, algorithms, and, my favorite term of all, prompt engineering. It’s almost as if AI is the new T-Rex, and everyone wants to claim they’ve mastered it. People throw around buzzwords, mention how they’ve cracked the code on getting chatbots to do what they want, and present themselves as cutting-edge specialists in a field that, let’s be honest, is more complex than most of us realize.

Here’s where things get interesting: like the kid who knows all the dinosaur names but doesn’t quite understand how fossils are dated or what really makes a species go extinct, many of these so-called AI experts are only skimming the surface. They might know how to ask an AI tool a question or get it to spit out a clever response, but that doesn’t mean they understand how it all works under the hood. They’re not engineers, data scientists, or machine learning specialists who deal with the actual nuts and bolts of AI on a daily basis.

And yet, much like our dinosaur-obsessed kids, they don’t see it that way. There’s this sense of empowerment: they feel that by wielding these tools, they’ve somehow absorbed the power of the AI itself. They see themselves as being on the cutting edge, even though what they’ve really done is master the equivalent of a surface-level trivia game. Knowing a bunch of facts about dinosaurs doesn’t make you a paleontologist, and knowing how to get an AI tool to generate some interesting content doesn’t make you an AI expert.

But here’s where it gets tricky: in both cases, that surface-level knowledge is enough to make someone feel competent, sometimes overly competent. The term “prompt engineering” is a great example of this. It sounds complex and technical, but in many cases, it’s just about phrasing questions in a way that gets the response you’re hoping for. There’s no deep understanding required, but it’s packaged up in a way that makes it seem like a specialized skill. The result? A lot of people walking around feeling like they’ve tapped into the secrets of AI, when really, they’re still hanging out in the sandbox.

Meanwhile, the real experts (data engineers, machine learning specialists, and those who understand the math and algorithms that drive AI) are doing the equivalent of the hard paleontological work. They’re the ones handling big data, running complex models, and troubleshooting problems that most of us wouldn’t even know how to approach.

But just like a kid roaring around the living room pretending to be a T-Rex, the self-proclaimed AI experts are having a great time projecting all that AI power onto themselves. And honestly, can you blame them?

I don’t deny that someone needs to explain to users how to use these tools. But I have two issues here:

- The focus on “THIS ONE TRICK” posts shifts the responsibility for success to the user, who gets a one-size-fits-all tool that they are now encouraged to use for specialized jobs. To master these jobs, they need to tweak the tool, follow lots of “tricks”, download “cheatsheets” and read “whitepapers”. That’s not how I envision a modern workplace. Yes, training and adoption are extraordinarily important for software success, but what we see right now is heading in the wrong direction. We need to provide users with specialized tools (I talked about this in this post: ) and then teach them when to use which.

- The flood of self-proclaimed specialists who barely scratch the surface makes it appear as if everyone is an authority on AI. Let me be very clear here: I use and build AI tools (way beyond hooking an LLM into a customer bot), and still I don’t claim to be an expert.

At this point, I am bored by all the dinosaur-phase, AI-related LinkedIn posts that promise you the ONE trick that will make ChatGPT transform your work. In the meantime, you just suffer through a Tuesday full of back-to-back meetings (but luckily, the double bookings are now recorded and transcribed, and tasks have been jotted down by Copilot, so there is no excuse anymore not to catch up) and wait for the transformation.

What’s your take?

You May Also Like

Why you shouldn’t say 'please' or 'thank you' to AI (and why it matters)

We’ve all been there: asking ChatGPT, Copilot or whatever AI, for something and instinctively saying “please” or “thank you.” It feels polite, right? But AI doesn’t care. Talking to it like it’s a …

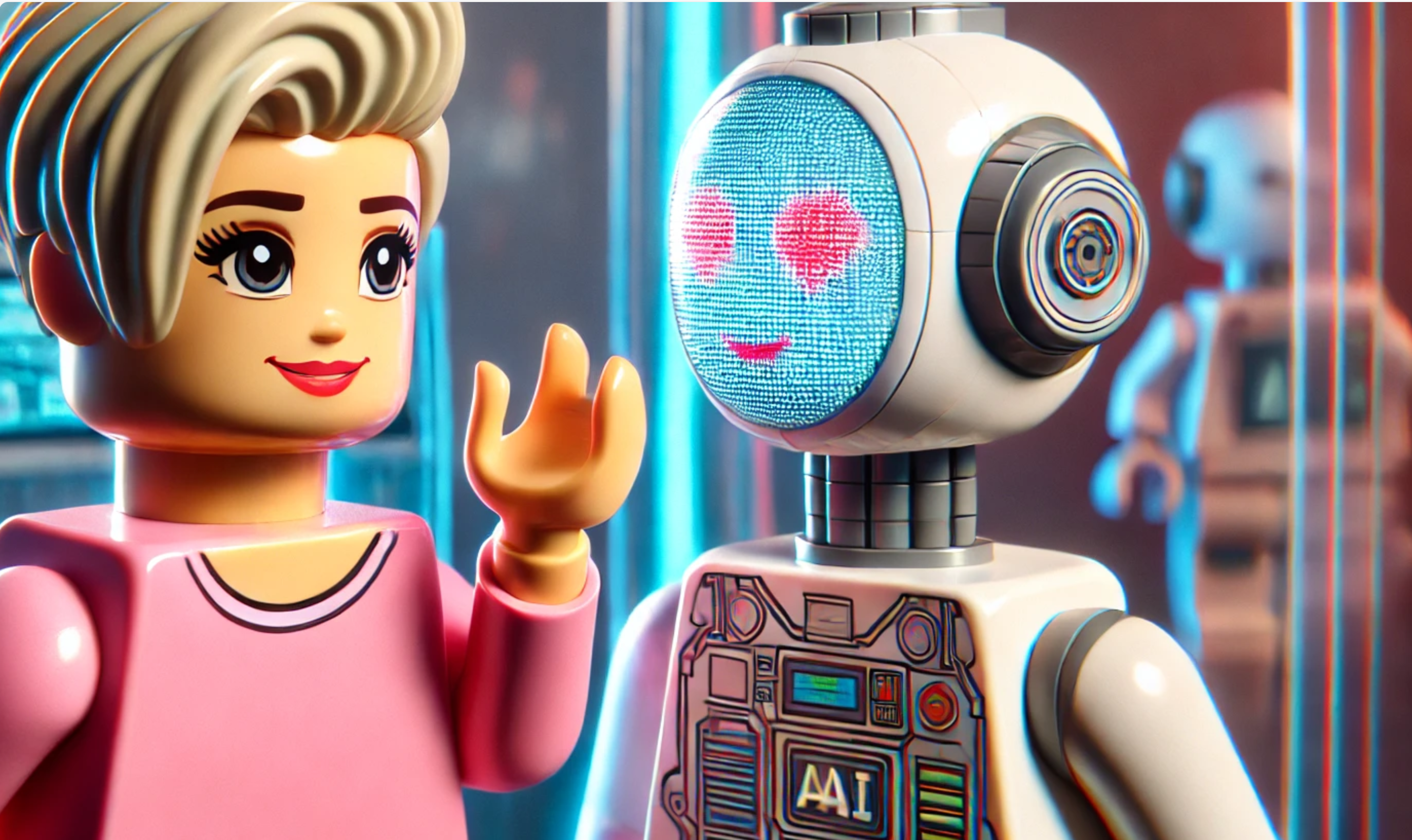

Hasta la vista?! About how we picture AI

Ever searched for “AI” on a stock image site or even used an AI tool to generate an image of “AI”? I can’t help but noticing three recurring themes: robots that look like the Terminator …

AI can now REASON?! tl;dr: no, it cant!

Another day, another AI model drop! This time, it’s the OpenAI o1 series, and wow, the hype is all over my feed 🙄 Is this the breakthrough in reasoning we’ve all been waiting for? 🤔 OpenAI …