Org chart is cheap, show me the relationships

tl;dr

AI can read every policy, spec, and wiki page in your company; it even knows the formal org chart. But it doesn’t feel the informal paths: the backchannels, the trust networks, the unspoken approvals. Institutional knowledge lives in the spaces between people. When you drop an AI agent into an organization and expect it to “just know”, it plays the wrong game. Map the relationships first; then let AI augment the humans who already run them.

The illusion of instant expertise

There’s something deeply appealing about the idea that AI could replace the messiness of human memory. If we could just ingest all the project notes, the documented processes, the internal wikis, maybe even throw in the Slack/Teams logs for good measure, surely we could replicate what the experts in the building know. (I’ll happily set aside the fact that knowledge is rarely documented well, that processes are often unclear or broken, and that people fight political battles in hierarchical structures: some to thrive, some just to survive this quarter.)

The problem is that we’re conflating access to documentation with access to understanding. Reading what happened is not the same as knowing how it happened, or why. And it definitely doesn’t mean you can replicate it. The fantasy is that institutional knowledge is just poorly indexed content. But most of it was never written down in the first place. It lives in offhand comments, hallway deals, late-night decisions, subtle workarounds, and emotional memory, the kind that says, “Yeah, that diagram says A, but trust me, talk to Maria first.”

AI can scan the documentation, but it won’t sense the tension in a planning call. It can retrieve the slide deck, but it doesn’t know that slide was a decoy for a much harder conversation that never made it into writing. It can suggest a process, but it won’t understand the invisible compromises that hold it together.

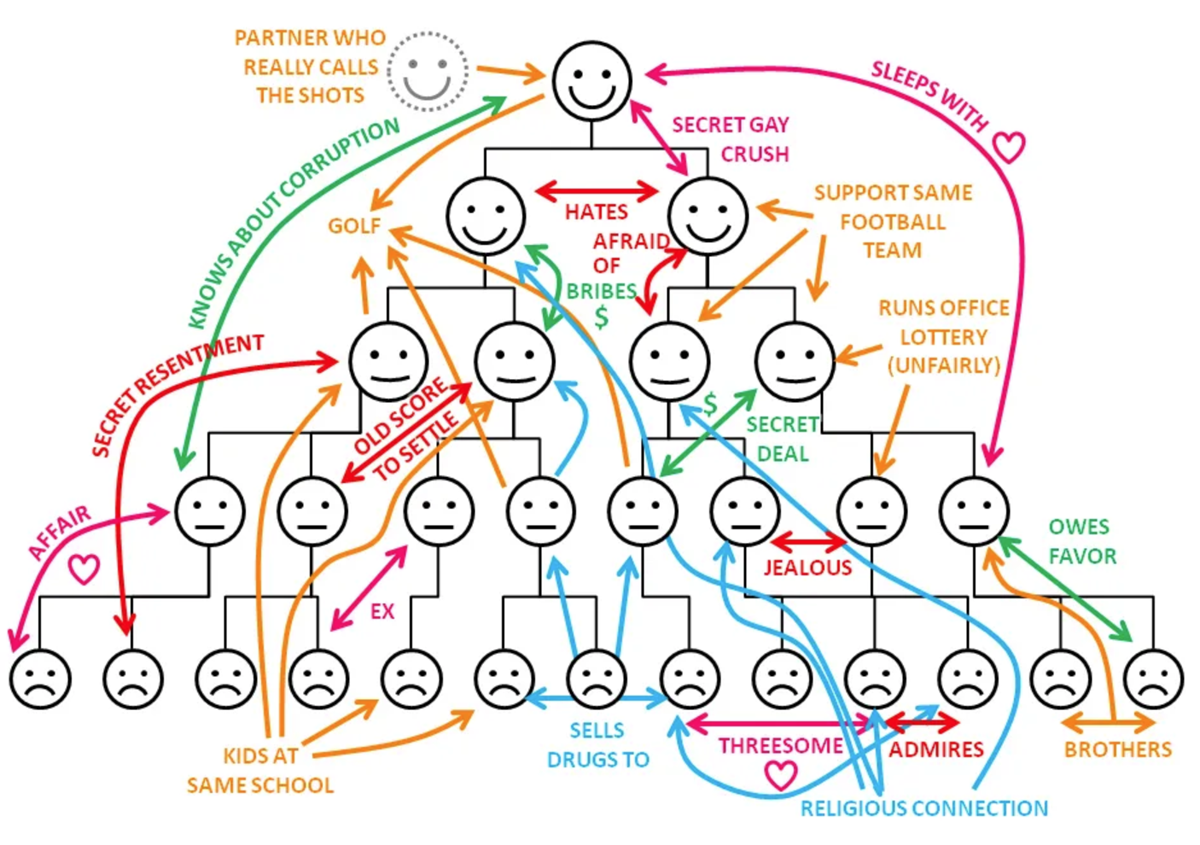

The real org chart

A few years ago, a cartoonish diagram started circulating in HR departments. It showed a smiling org chart knocked sideways by arrows of resentment, secret crushes, backchannel deals, and long-forgotten favors. People laughed; some a little too hard. It stuck because it captured something everyone knew but no one ever documented: real power, real trust, and real influence don’t follow reporting lines, and they never get written down.

Every company has someone who technically reports to someone else, but in practice, calls the shots. Every dev team has a person who isn’t the architect but whose opinion the architect won’t ship without. Every meeting has the person whose silence speaks louder than the project sponsor’s cheerleading.

Sometimes it’s the executive assistant who knows exactly when to schedule a meeting so it gets the right attention. Sometimes it’s the long-tenured employee who can de-escalate a cross-team conflict with a single sentence. These aren’t exceptions; they’re infrastructure. The kind no system of record captures. When someone says, “Let me check with them first”, you’re seeing the real org chart at work. And AI, no matter how much data you feed it, doesn’t feel those dynamics.

Why AI stumbles

AI struggles in knowledge work because it assumes the structure is the truth. It assumes that if you feed it the correct inputs, it will produce the correct answers. But knowledge work is full of context, ambiguity, and shifting expectations.

Let me give you an example: Language models score probabilities; humans score trust. When someone gives you a nuanced answer to a politically charged question, you’re not just hearing the words. You’re reading between the lines, tracking facial expressions, body language, tone. You’re also remembering how they handled a tough decision two years ago. AI misses all of this.

Even if you give it access to all the right content (remember, that’s a whole different challenge!), you’re working with static context. An org’s knowledge graph changes faster than its documents. Today’s team lead becomes tomorrow’s problem child. The person who used to run things steps back. Someone else quietly steps up. Your AI won’t keep up with the gossip, and it definitely won’t sense that a document is technically accurate but politically obsolete.

There’s also the Conway problem. Conway’s Law tells us that systems mirror the communication structures of the teams that build them. If you don’t know who actually talks to whom, your automation will lock in the wrong assumptions. AI solutions built on misread maps only deepen the disconnect.

And finally, AI is blind to the informal networks. The people who connect teams, translate between departments, or just know who to talk to rarely show up in org charts. These are your bridge people. They have no formal mandate but hold immense influence. If you don’t account for them, your AI will repeatedly miss the mark.

What we actually need

We need to stop trying to replace knowledge workers with AI and instead design tools that help them be more effective. That starts with respecting how knowledge actually flows in an organization. Don’t build systems that expect to learn everything from one big data ingestion. Most of what matters isn’t written down, and what is written often lacks the nuance to be interpreted safely without context. Instead, build opt-in systems that let people gradually contribute and curate the knowledge they hold. Systems that understand the value of context, timing, and consent.

Instead of mapping roles, map relationships. Knowing that Alex is the “Product Owner” doesn’t tell you much. Knowing that Alex always defers to Jamie on architectural questions, despite having the final say on paper, tells you a lot. Track who people actually turn to for help, advice, or unblocking. The connectors. The mentors. The quiet experts. These are the nodes an AI assistant needs to understand if it’s going to provide value without stepping on toes.
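To make the Alex-and-Jamie distinction concrete, here is a minimal Python sketch, assuming you already have opt-in records of who consults whom on which topic. The names, topics, and the helpers `build_relationship_map` and `go_to_person` are hypothetical illustrations, not part of any product. The only point is that a relationship map can answer “who do people actually go to?” where a role lookup cannot.

```python
from collections import Counter, defaultdict

# Hypothetical, illustrative data only.
# What the org chart says: who formally owns decisions on each topic.
formal_owner = {"architecture": "Alex", "backlog": "Alex"}

# Who people actually turn to: (asker, consulted, topic) tuples.
# Assumed to come from opt-in, curated input, not from surveillance.
consultations = [
    ("Alex", "Jamie", "architecture"),
    ("Alex", "Jamie", "architecture"),
    ("Sam", "Jamie", "architecture"),
    ("Sam", "Alex", "backlog"),
    ("Jamie", "Alex", "backlog"),
    ("Sam", "Maria", "scheduling"),
]


def build_relationship_map(observations):
    """Count how often each person is consulted, per topic."""
    graph = defaultdict(Counter)
    for _asker, consulted, topic in observations:
        graph[topic][consulted] += 1
    return graph


def go_to_person(graph, topic):
    """Return the person consulted most often on a topic, or None if no data."""
    counts = graph.get(topic)  # .get() avoids creating empty entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]


relationships = build_relationship_map(consultations)
for topic, owner in formal_owner.items():
    actual = go_to_person(relationships, topic)
    if actual is not None and actual != owner:
        print(f"{topic}: on paper {owner}, in practice {actual}")
```

With this sample data it prints only `architecture: on paper Alex, in practice Jamie`; the backlog matches the org chart, so it stays quiet. Counting consultations is a crude signal that says nothing about tone or trust, which is why talking to the people involved still matters.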

And above all

Stop framing AI as a cost-cutting tool. Frame it as a knowledge amplifier.

AI is something that helps humans find the right person faster, spot inconsistencies earlier, or prep better for meetings. Let people stay in charge. Just give them better tools.

Making it real

So how do we build AI that respects the real org chart? Start with relationship mapping. Not in a creepy surveillance way, but in the spirit of transparency and collaboration. You don’t need to trace every coffee chat. But there’s a big difference between “the dev team” and “the three people in the dev team everyone trusts with hard questions”.

Find those people. Talk to them. Ask not what they do on paper, but how they get things done in practice. They’ll tell you where the bottlenecks really are, who smooths things over when teams fight, and where decisions get quietly reversed before they hit the roadmap.

Then, build your AI around those realities. If someone always edits the architecture document after it’s approved, make sure the AI knows not to present the approved version as final without checking with them. If two people interpret the same policy differently, your assistant shouldn’t pick one; it should flag the conflict and suggest a conversation.
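Here is a minimal Python sketch of those two rules, assuming the assistant has been handed, by the people involved, a note of who habitually revises which document after approval and how individuals read a given policy. `OrgContext`, `review_recommendation`, the file name, and the policy readings are all hypothetical. The design choice that matters: the function never resolves a disagreement; it only returns caveats for a human to act on.

```python
from dataclasses import dataclass, field


@dataclass
class OrgContext:
    """Hypothetical context curated by the people involved (opt-in)."""
    # document -> person who habitually revises it after approval
    post_approval_editors: dict[str, str] = field(default_factory=dict)
    # policy -> {person: how that person reads the policy}
    interpretations: dict[str, dict[str, str]] = field(default_factory=dict)


def review_recommendation(ctx, document=None, policy=None):
    """Return caveats to attach to a suggestion instead of answering blindly."""
    caveats = []
    editor = ctx.post_approval_editors.get(document)
    if editor:
        caveats.append(f"Check with {editor} before treating '{document}' as final.")
    readings = ctx.interpretations.get(policy, {})
    if len(set(readings.values())) > 1:
        # Don't pick a winner: name the people and suggest a conversation.
        people = " and ".join(sorted(readings))
        caveats.append(f"{people} read '{policy}' differently; suggest they talk it through.")
    return caveats


ctx = OrgContext(
    post_approval_editors={"architecture.md": "Jamie"},
    interpretations={
        "travel policy": {"Alex": "manager approves", "Maria": "finance approves"},
    },
)
for caveat in review_recommendation(ctx, document="architecture.md", policy="travel policy"):
    print(caveat)
```

If everyone reads a policy the same way and nobody edits the document after sign-off, the function returns an empty list and the assistant can answer normally.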

And don’t measure success by usage alone. Measure trust. When people rely on an AI suggestion, do they feel it helped? Do they come back? Or do they start ignoring it because it keeps missing the point? Usage can be a vanity metric. Trust is what determines whether AI becomes a partner or a nuisance.

Closing thought

Knowledge work is deeply human. It’s made of trust, shortcuts, conflict, and compromise. If your AI doesn’t know who people trust, who they avoid, who they quietly run things by before making a call, it doesn’t know enough. The real org chart isn’t in the HR system; it’s in the relationships. Start there.

PS

If you are still at the “hey Copilot, what’s our travel policy?” level, let’s talk about getting you out of chatbot jail. AI can do so much more.
